Kwil Network Performance Metrics

Summary

Kwil delivers predictable and reliable performance across a range of transaction sizes, block sizes, and concurrency levels. This document provides an overview of the performance you can expect and establishes baseline, real-world metrics for Kwil database networks.

For Kwil, the key factor that constrains performance relative to traditional databases is the requirement for nodes to achieve practical Byzantine consensus. This report tracks the following metrics under a variety of conditions:

Data Ingress Rate: A network’s data ingress capacity, measured in GB/day.

Throughput Utilization: The amount of data that a network can process per day divided by the theoretical limit.

Transactions per Second (TPS): The number of transactions that can be processed per second.

Average Time to Finality: The time needed to finalize a block.

Test Conditions and Methodology

Each test was conducted on a 55-node testnet (50 validator nodes and 5 non-validating peer nodes). Nodes are geographically dispersed, with 11 nodes in the Western United States, 22 nodes in the Eastern United States, and 22 nodes in Central Europe. Each node runs on a separate AWS c5d.4xlarge machine. In each test case, all network requests are sent to non-validating peer nodes, which gossip transactions to validator nodes.

Each test scenario measures performance at a different transaction payload size (the independent variable) and generates enough transactions to fill an entire block. Tests 1 through 6 use the default Kwil configuration of a 6 MiB block size and a 6-second block timeout (the configured target block time). Tests 7 and 8 instead treat block size as the independent variable, raising it to 12 MiB and 18 MiB, respectively.

Results and Commentary

In tests 2 through 8, the limiting factor across all metrics is the BFT consensus engine’s capacity to reach consensus on the transactions. In test 1, the limiting factor is instead the remaining overhead of processing transactions on a node (e.g., PostgreSQL execution and P2P gossip), because 1 kB transaction payloads lead to nearly 6,000 transactions per block.
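To see why small payloads shift the bottleneck, it helps to estimate how many transactions fit in one block. The following is a rough sketch, not part of Kwil: the function name is hypothetical, 1 kB is assumed to mean 1,000 bytes, and per-transaction envelope data (signatures, headers) is ignored, so the result is only an upper bound on the count seen in practice.

```python
MIB = 1024 * 1024  # bytes in one mebibyte


def max_transactions_per_block(block_size_bytes: int, payload_bytes: int) -> int:
    """Upper bound on transactions per block.

    Assumption: each transaction occupies exactly its payload size;
    real transactions carry extra envelope data, so fewer will fit.
    """
    if payload_bytes <= 0:
        raise ValueError("payload_bytes must be positive")
    return block_size_bytes // payload_bytes


# Default 6 MiB block with 1 kB (assumed 1,000-byte) payloads.
print(max_transactions_per_block(6 * MIB, 1_000))
```

Under these assumptions the bound is in the low thousands of transactions per block, the same order of magnitude as the figure quoted for test 1.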

Data Ingress Rate & Throughput Utilization

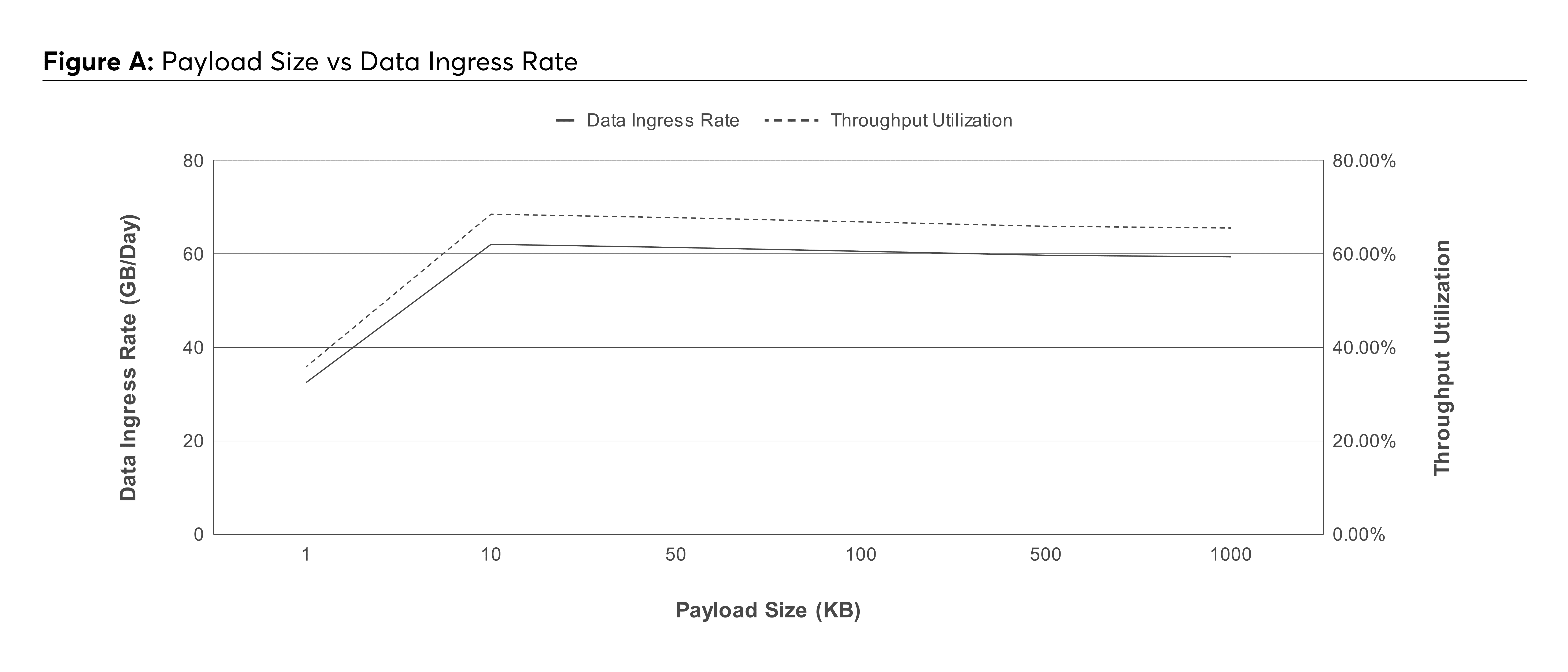

In Figure A, the data ingress rate was consistently around 60 GB / day and the observed throughput utilization was around 66%. At a 6 MiB block size and a 6-second block time, the theoretical data ingress limit is 90.6 GB / day. The observed data ingress rate is lower than the theoretical limit because of latency between nodes during consensus rounds. The theoretical limit assumes no latency between nodes, but factors such as physical distance, routing, and server performance affect the actual latency nodes incur when completing the consensus process.
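The theoretical limit and the utilization figure can be reproduced with a few lines of arithmetic. This is a minimal sketch with hypothetical function names. It assumes one full block is committed every block-time interval and that GB means 10^9 bytes, which matches the 90.6 GB / day figure quoted above.

```python
MIB = 1024 * 1024        # bytes in one mebibyte
SECONDS_PER_DAY = 86_400


def theoretical_ingress_gb_per_day(block_size_mib: float, block_time_s: float) -> float:
    """Data ingress limit if every block is full and arrives exactly on time."""
    bytes_per_day = block_size_mib * MIB / block_time_s * SECONDS_PER_DAY
    return bytes_per_day / 1e9  # assumption: 1 GB = 10**9 bytes


def throughput_utilization(observed_gb_per_day: float, theoretical_gb_per_day: float) -> float:
    """Observed ingress as a fraction of the theoretical limit."""
    return observed_gb_per_day / theoretical_gb_per_day


limit = theoretical_ingress_gb_per_day(block_size_mib=6, block_time_s=6)
print(f"theoretical limit: {limit:.1f} GB/day")                     # 90.6 GB/day
print(f"utilization: {throughput_utilization(60, limit):.0%}")      # 66%
```

The same function gives the theoretical limits for other block sizes, such as the 12 MiB and 18 MiB configurations used in tests 7 and 8.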

In the first test case (1 kB payload body per transaction), data ingress and throughput performance are constrained by the processing overhead in all parts of the system. Factors such as the consensus engine, P2P gossip, PostgreSQL execution, and others have a higher overhead when executing more transactions per block.

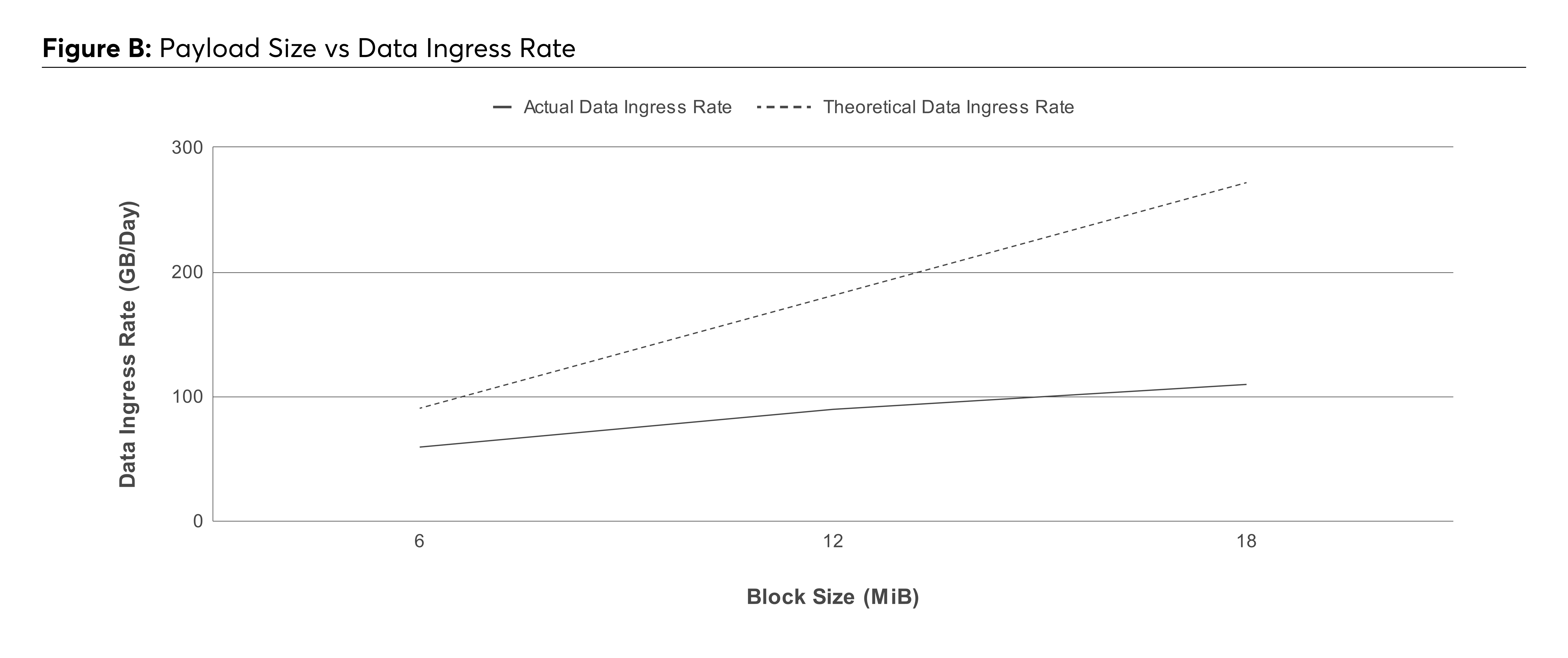

In Figure B, increasing block size results in a higher data ingress rate; however, throughput utilization diminishes due to the corresponding increase in the network data transfer and each node’s computational load at each consensus round.

Transactions per Second (TPS)

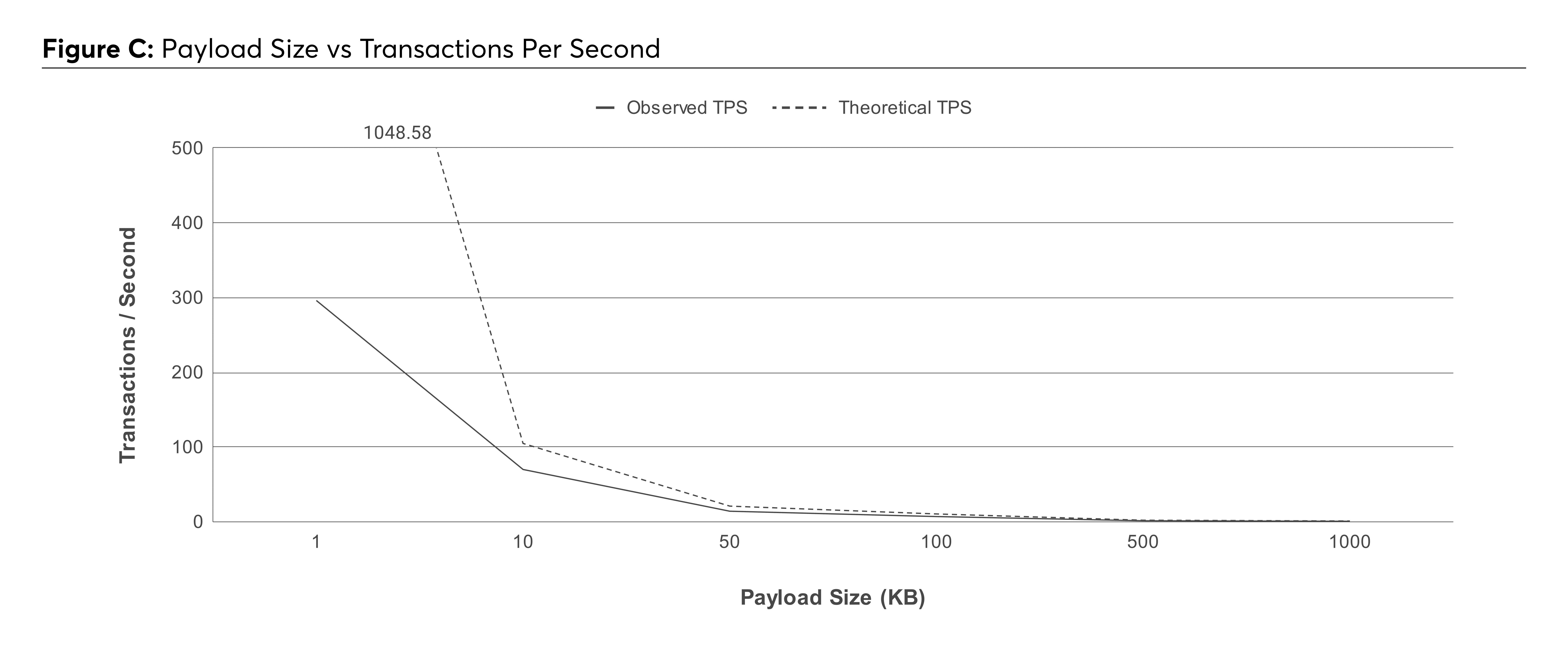

In Figure C, the Transactions Per Second (TPS) approached the theoretical TPS in all test cases except the first. The theoretical TPS is calculated as the block size divided by the transaction payload size, further divided by the block timeout in seconds (e.g., 6000 kB block size / 10 kB transaction payload size / 6-second block timeout = 100 transactions per second). As observed with the data ingress rate, the actual TPS fell slightly below the theoretical limit due to latency between nodes during consensus. For test case 1, which involved 1 kB payloads, the discrepancy between observed and theoretical TPS was primarily attributed to processing overhead from the large transaction volume.
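The theoretical TPS formula described above can be written as a short function. This is an illustrative sketch; the function name is hypothetical, and it follows the document's simplification of treating a 6 MiB block as 6,000 kB.

```python
def theoretical_tps(block_size_kb: float, payload_kb: float, block_timeout_s: float) -> float:
    """Theoretical TPS = (block size / payload size) / block timeout."""
    if payload_kb <= 0 or block_timeout_s <= 0:
        raise ValueError("payload_kb and block_timeout_s must be positive")
    return block_size_kb / payload_kb / block_timeout_s


# Worked example from the text: 6000 kB block, 10 kB payloads, 6-second timeout.
print(theoretical_tps(6000, 10, 6))  # 100.0
```

Because payload size is in the denominator, halving the payload size doubles the theoretical TPS, which is why the 1 kB case has the highest theoretical ceiling and is the most exposed to per-transaction processing overhead.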

Average Time To Finality

In Figure D, average time to finality closely approached its theoretical limit of 6 seconds, except in the first test case. The theoretical limit is determined by each chain's block timeout, typically set at 6 seconds. The deviation in the first test case was caused by the additional processing overhead, in all parts of the system, of the large transaction volume that small payload sizes produce.

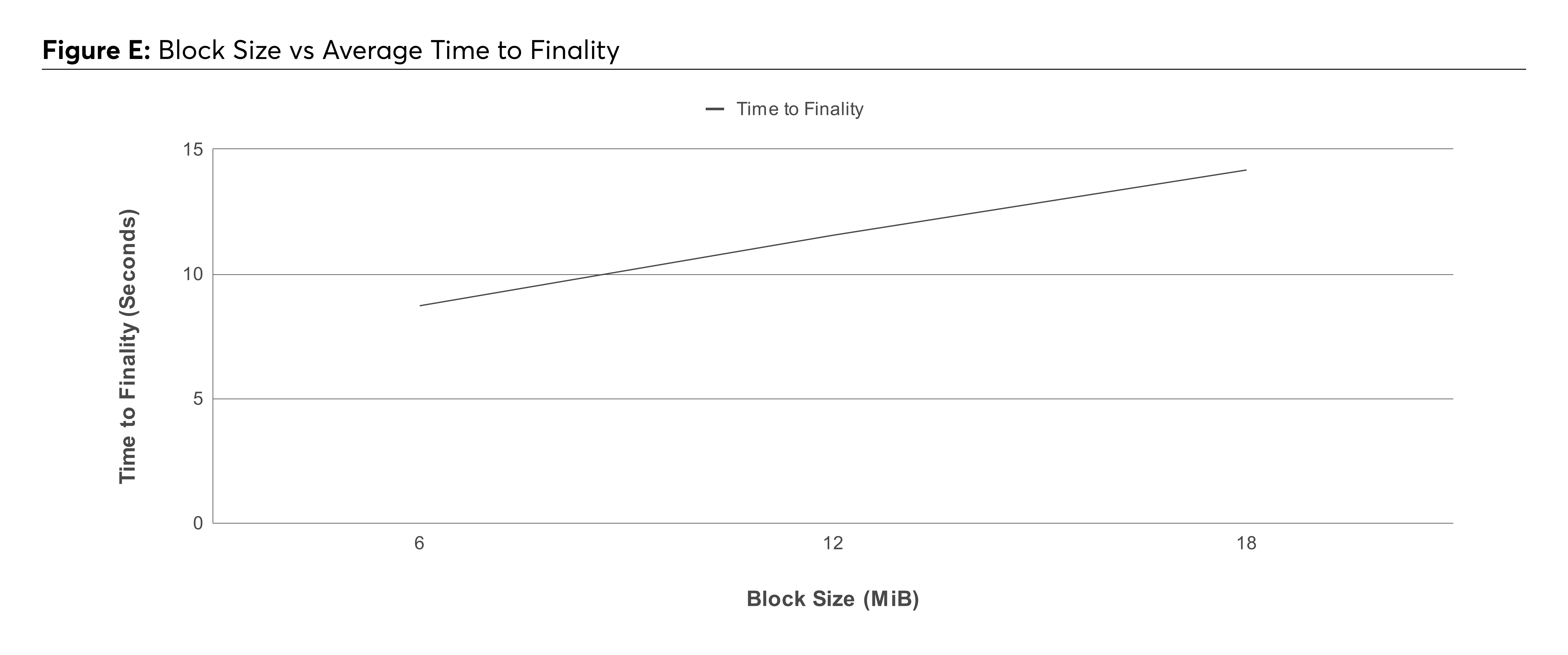

In Figure E, although the block timeout remained at 6 seconds, increasing the block size led to an increase in time to finality. This performance decline was primarily caused by increased latency between nodes during consensus rounds and the additional data requiring consensus due to the larger block sizes.

Conclusion

This report details performance benchmarks for Kwil networks, allowing prospective and current users to assess whether Kwil is the right solution for their use case and to configure their networks for optimal performance. While networks generally operate close to their theoretical limits for all metrics, some discrepancies were observed on networks processing a high volume of small payloads (1 kB or less).

It is important to note that actual performance can vary based on several factors, including the number of validators, their geographic distribution, and the hardware specifications of the machines running the nodes.

These performance metrics demonstrate Kwil’s capability to efficiently handle varying transaction sizes and payloads, maintaining predictable performance across a variety of scenarios and use cases.

Appendix A - Terminology

Block Size

The maximum amount of data that can be included in a single block in the network’s consensus. Each block contains transactions to be executed on each node’s PostgreSQL instance, as well as other important information such as timestamp and a reference to the previous block.

Block Timeout

The maximum duration the network waits to gather and validate transactions before producing a new block.

Concurrency

The ability of the system to handle multiple users submitting transactions concurrently. In Kwil, transactions are executed sequentially and are ordered during the consensus process.

Consensus Rounds

The process through which network validators agree on the validity of transactions and the order in which they are added to a block.

Data Ingress

The rate at which validators can reach consensus on data entering into a network.

Finality

The state of a transaction being confirmed via the network consensus process.

Gossip

A communication protocol used by nodes to share and propagate information, such as transactions and blocks.

Non-validating Peer Node

A type of node that does not participate in the consensus process. These nodes do not validate transactions or blocks but can still relay information, store network state, and assist in data dissemination.

Transaction

A record of an operation, such as a CRUD operation on a PostgreSQL database or a vote for a new validator to join the network.

Validator Node

A node that participates in the consensus process by verifying transactions and blocks.